Dynamic Visual SLAM Project

Introduction

🌞 Aims:

- Addressing the impact of highly dynamic objects on SLAM system performance.

- Solving the problem of recognizing unknown dynamic objects when the semantic segmentation network fails.

- Overcoming the limitation of SLAM systems in generating dense point cloud maps in dynamic scenes.

📝 Advisor: Prof. Yifei Wu

📅 Duration: Mar. 2024 - present

Contributions

- Embedded the ANN semantic segmentation network into ORB-SLAM2 through the ROS framework to identify common dynamic objects.

- Proposed an unknown dynamic object recognition algorithm combining depth map clustering and multi-view geometry, enabling accurate dynamic object recognition when the semantic segmentation network fails.

- Designed a strategy to remove dynamic features using semantic information and dynamic depth clusters, improving localization accuracy and map quality.

- Developed a static point cloud mapping thread to construct high-quality maps in dynamic environments.
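The dynamic-feature removal step can be illustrated with a minimal sketch. It assumes keypoints have already been grouped into depth clusters and that the relative pose (R, t) from the reference frame to the current frame is known. The function names, the keypoint tuple layout, the 0.1 m depth threshold and the 0.5 voting ratio are hypothetical placeholders, not the project's actual parameters, and the geometric test shown is a generic depth-consistency check rather than necessarily the exact criterion used in the proposed system. Each keypoint is back-projected with a pinhole model, moved into the current frame and reprojected; a large gap between predicted and measured depth counts as a vote for motion. A cluster is flagged dynamic when most of its checked points vote that way, and a keypoint survives only if neither its semantic label nor its cluster marks it as dynamic.

```python
from collections import defaultdict

# Hypothetical keypoint layout: (u, v, depth_m, cluster_id, semantic_dynamic)
# u, v are pixel coordinates in the reference frame; depth is in metres.


def depth_error(u, v, d, R, t, depth_cur, K):
    """Gap between predicted and measured depth after warping one pixel.

    Returns None when the point cannot be checked (behind the camera,
    outside the current image, or no valid depth reading there).
    """
    fx, fy, cx, cy = K
    # Back-project the reference pixel to a 3-D point (pinhole model).
    p = ((u - cx) * d / fx, (v - cy) * d / fy, d)
    # Transform into the current camera frame: q = R p + t.
    q = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    if q[2] <= 0:
        return None
    # Project into the current image and look up the measured depth.
    uc = round(fx * q[0] / q[2] + cx)
    vc = round(fy * q[1] / q[2] + cy)
    if not (0 <= vc < len(depth_cur) and 0 <= uc < len(depth_cur[0])):
        return None
    measured = depth_cur[vc][uc]
    if measured <= 0:
        return None
    return abs(q[2] - measured)


def remove_dynamic(keypoints, R, t, depth_cur, K, depth_thresh=0.1, ratio=0.5):
    """Return (static keypoints, set of cluster ids judged dynamic)."""
    votes = defaultdict(lambda: [0, 0])  # cluster_id -> [dynamic votes, checked points]
    for u, v, d, cid, _ in keypoints:
        err = depth_error(u, v, d, R, t, depth_cur, K)
        if err is None:
            continue
        votes[cid][1] += 1
        if err > depth_thresh:
            votes[cid][0] += 1
    # Every entry in votes has at least one checked point, so n > 0 here.
    dynamic_clusters = {cid for cid, (dyn, n) in votes.items() if dyn / n > ratio}
    # Keep a keypoint only if neither semantics nor its depth cluster flag it.
    static = [kp for kp in keypoints if not kp[4] and kp[3] not in dynamic_clusters]
    return static, dynamic_clusters
```

Voting per cluster rather than per point makes the decision robust to a few noisy depth readings: a whole cluster (e.g. an unlabeled moving object) is removed only when the majority of its checkable points are inconsistent with the camera motion.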

Conclusion

- Localization: Experimental results on the TUM and Bonn datasets show that the system ranks first or second in accuracy among most mainstream dynamic SLAM algorithms. Compared with ORB-SLAM2, localization accuracy on the TUM dataset improves by over 90%, with a minimum ATE of 0.0072 m. Compared with semantic-only methods, accuracy improves by up to 70%.

- Map creation: The proposed system is capable of constructing high-quality static dense point cloud maps in dynamic environments.
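The ATE figures above are commonly reported as a root-mean-square error over position differences. The following is a minimal sketch, assuming the estimated and ground-truth positions have already been associated by timestamp and rigidly aligned (the TUM benchmark's evaluation tool performs both steps before computing the error); the function names are hypothetical. The relative improvement is taken as (baseline ATE − new ATE) / baseline ATE × 100%.

```python
import math


def ate_rmse(gt, est):
    """RMSE of the translational error between associated, aligned positions (metres)."""
    if not gt or len(gt) != len(est):
        raise ValueError("trajectories must be non-empty and of equal length")
    # Squared Euclidean distance between each ground-truth and estimated position.
    squared = [sum((g - e) ** 2 for g, e in zip(p, q)) for p, q in zip(gt, est)]
    return math.sqrt(sum(squared) / len(squared))


def improvement_percent(baseline_ate, new_ate):
    """Relative ATE reduction of a new method over a baseline, in percent."""
    return (baseline_ate - new_ate) / baseline_ate * 100.0
```

As an illustration with made-up numbers (not results from the paper), reducing a baseline ATE of 0.5 m to 0.05 m corresponds to a 90% improvement.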

Outcomes

- A complete, novel RGB-D visual SLAM system with improved accuracy, robustness, and environmental awareness in highly dynamic scenarios.

- A journal paper (IEEE Transactions on Instrumentation and Measurement, JCR Q1, under review).

- A conference paper (accepted at the 22nd IEEE International Conference on Industrial Informatics, IEEE INDIN 2024).

Project Showcase

Robot used for experiments:

(Photos: front view | back view)

Experimental procedures and results:

Since the related papers are still under review, only selected experimental results are presented here:

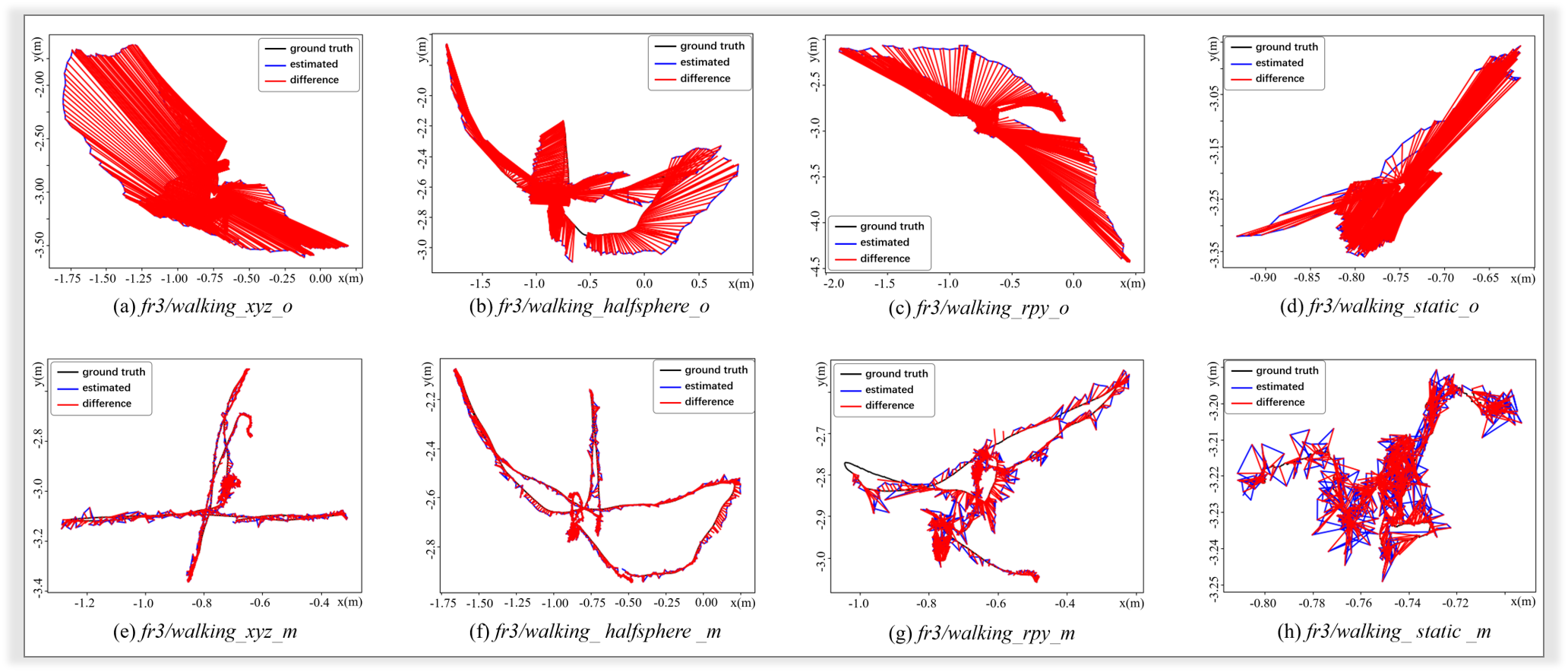

Experimental results on the TUM dataset:

ATE results of ORB-SLAM2 and the proposed system on four sequences. (a)–(d) are trajectories generated by ORB-SLAM2 on four dynamic sequences, while (e)–(h) are the results generated by the proposed system on the same sequences.

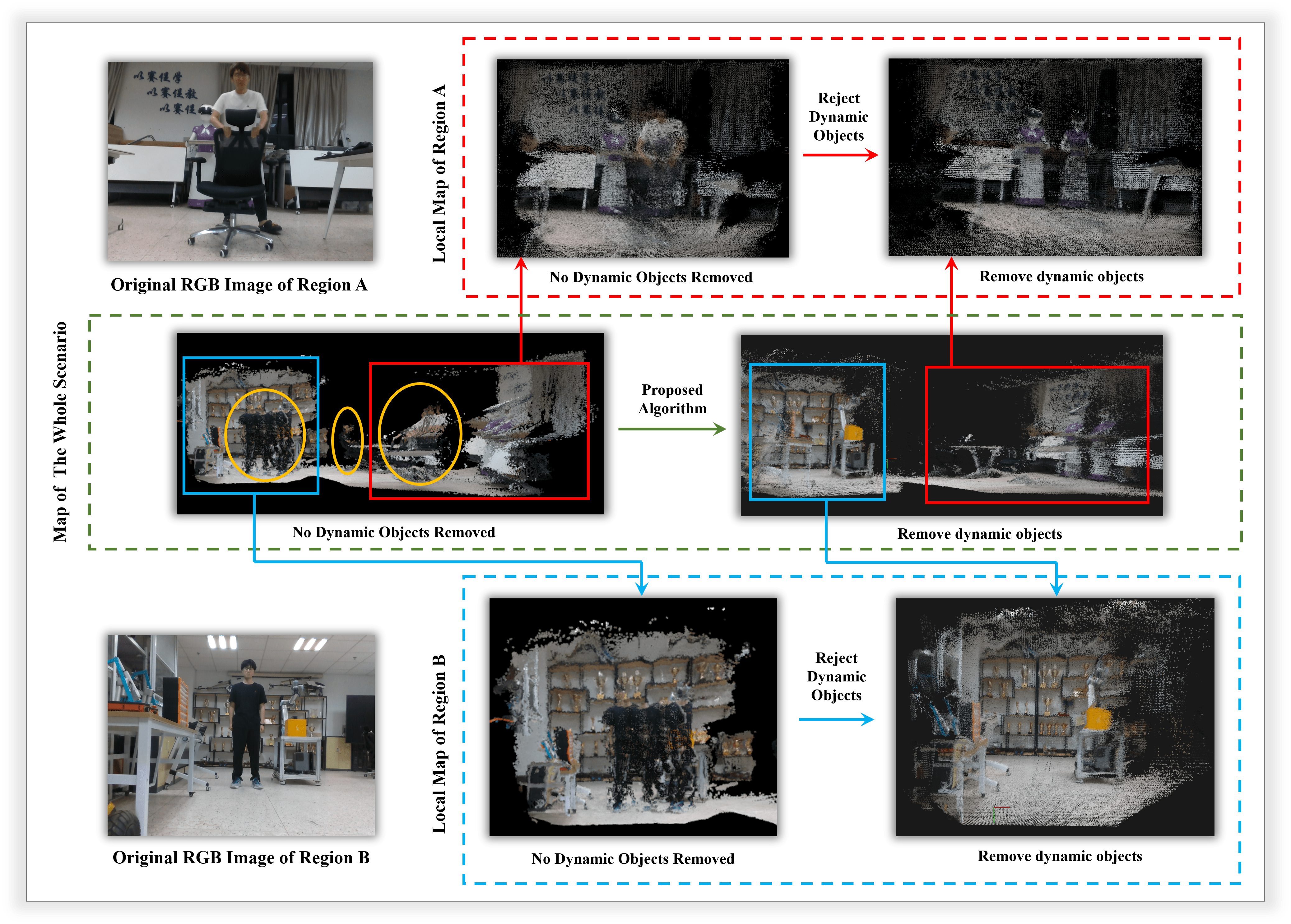

Map creation results in a real-world environment:

Comparison of dense point cloud mapping results in a real-world environment. The map of the whole scene is marked with a green dashed box. Regions A and B are marked with red and blue dashed boxes, respectively. Excluded dynamic point clouds are marked with yellow circles.
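Building a static dense map amounts to back-projecting every valid depth pixel that is not marked dynamic and transforming it into the world frame. The sketch assumes a per-pixel boolean dynamic mask (for example, the union of semantic masks and dynamic depth clusters), a known camera-to-world pose (R_wc, t_wc), and pinhole intrinsics K = (fx, fy, cx, cy); the function name and the 4 m depth cut-off are hypothetical, and this is not the project's actual mapping thread. A real system would typically also downsample the result (e.g. with a voxel grid) before fusing keyframes.

```python
def static_point_cloud(depth, rgb, dynamic_mask, K, R_wc, t_wc, max_depth=4.0):
    """Back-project static, valid depth pixels into coloured world-frame points.

    depth[v][u] is in metres, rgb[v][u] is a colour tuple, and
    dynamic_mask[v][u] is True for pixels belonging to dynamic objects.
    """
    fx, fy, cx, cy = K
    cloud = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            # Skip missing or far depth readings and anything flagged as dynamic.
            if d <= 0 or d > max_depth or dynamic_mask[v][u]:
                continue
            # Pinhole back-projection into the camera frame.
            pc = ((u - cx) * d / fx, (v - cy) * d / fy, d)
            # Camera-to-world transform: p_w = R_wc p_c + t_wc.
            pw = tuple(sum(R_wc[i][j] * pc[j] for j in range(3)) + t_wc[i] for i in range(3))
            cloud.append((pw, rgb[v][u]))
    return cloud
```

Filtering at the pixel level, before points enter the map, is what keeps moving objects out of the accumulated cloud instead of having to erase them afterwards.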